EHTrack: Earphone-Based Head Tracking via Only Acoustic Signals

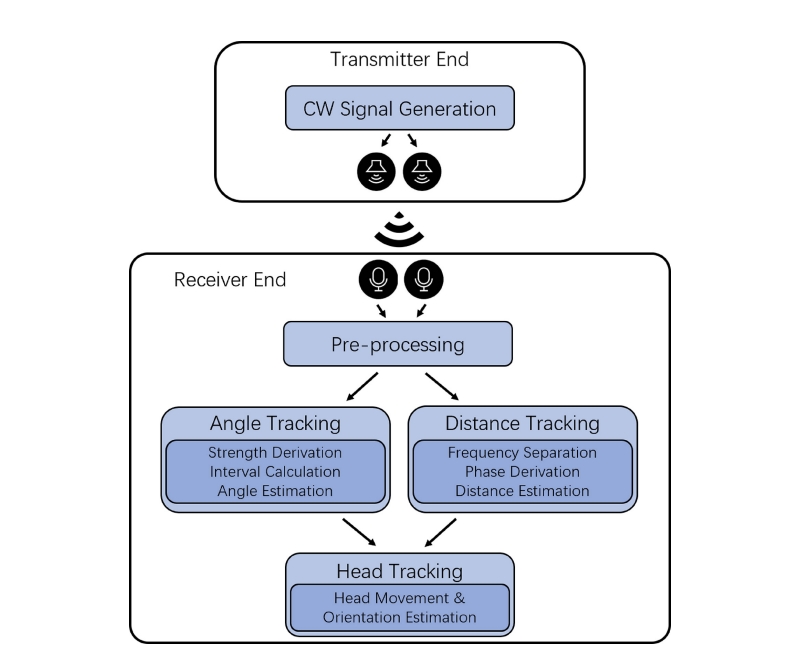

Head tracking is a technique that allows for the measurement and analysis of human focus and attention, thus enhancing the experience of human–computer interaction (HCI). Nevertheless, current solutions relying on vision and motion sensors exhibit limitations in accuracy, user-friendliness, and compatibility with the majority of commercial off-the-shelf (COTS) devices. To overcome these limitations, we present EHTrack, an earphone-based system that achieves head tracking exclusively through acoustic signals. EHTrack employs acoustic sensing to measure the movement of a pair of earphones, subsequently enabling precise head tracking. In particular, a pair of speakers generates a periodically fluctuating sound field, which the user’s two earphones detect. We propose a model that determines the user’s head movement and orientation from the changes in distance and angle between the earphones and the speakers. Our evaluation results indicate a high degree of accuracy in both head movement tracking, with an average tracking error of 2.98 cm, and head orientation tracking, with an average error of 1.83°. Furthermore, in a deployed exhibition scenario, we attained an accuracy of 89.2% in estimating the user’s focus direction.
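To make the geometric idea concrete, the following is a minimal 2D sketch of how a head position and orientation could be recovered once each earphone's distance to the two speakers is known. It is not EHTrack's actual model: the speaker placement on the x-axis, the function names `locate` and `head_pose`, and the assumption that per-speaker distances are already available are all illustrative.

```python
import math

def locate(r1, r2, spacing):
    """Locate one earphone from its distances to two speakers.

    Assumed layout (hypothetical): speaker 1 at (-spacing/2, 0),
    speaker 2 at (+spacing/2, 0), and the user in the half-plane y > 0.
    """
    # Subtracting the two circle equations eliminates y:
    #   r1^2 - r2^2 = 2 * x * spacing
    x = (r1 ** 2 - r2 ** 2) / (2 * spacing)
    y_sq = r1 ** 2 - (x + spacing / 2) ** 2
    if y_sq < -1e-9:
        raise ValueError("distances are inconsistent with the speaker spacing")
    return x, math.sqrt(max(y_sq, 0.0))

def head_pose(left, right):
    """Return the head centre and the ear-axis angle in degrees.

    The angle is that of the vector from the left to the right earphone,
    measured counterclockwise from the speaker baseline (the x-axis).
    """
    (lx, ly), (rx, ry) = left, right
    center = ((lx + rx) / 2, (ly + ry) / 2)
    angle = math.degrees(math.atan2(ry - ly, rx - lx))
    return center, angle
```

Calling `locate` once per earphone and passing both results to `head_pose` gives a position and an angle per measurement; tracking then amounts to following how these values change over time. A real system would also have to estimate the distances from the received audio and cope with noise and multipath, which this sketch leaves out.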