Few-Shot Adaptation to Unseen Conditions for Wireless-based Human Activity Recognition without Fine-tuning

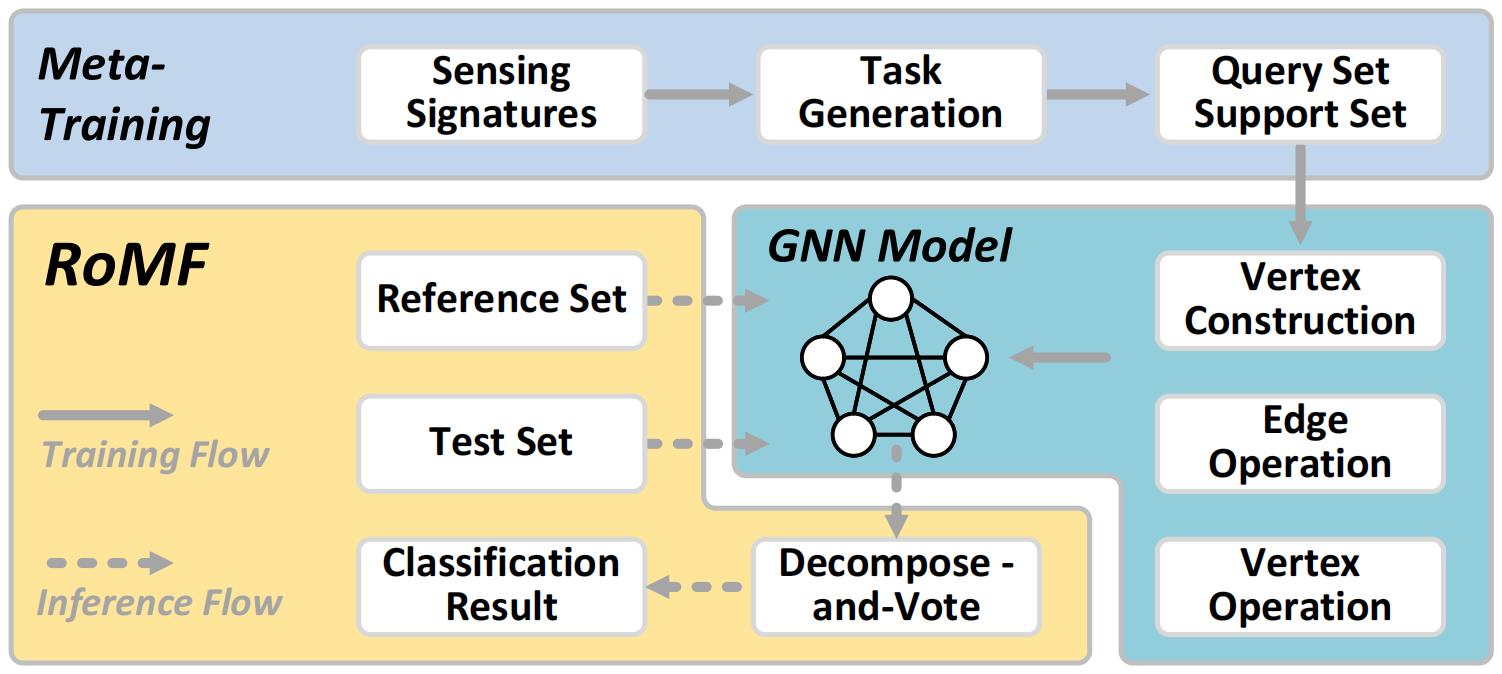

Wireless-based human activity recognition (WHAR) enables a variety of promising applications. However, because WHAR is sensitive to changes in sensing conditions (e.g., new environments, users, and activities), trained models often perform poorly under new conditions. Recent work uses meta-learning to adapt models, but these approaches must still fine-tune the model, which greatly hinders the widespread adoption of WHAR in practice because fine-tuning is difficult to automate and requires deep-learning expertise. The fundamental reason existing works need fine-tuning is that their goal is to learn the mapping between data samples and their activity labels. Since this mapping reflects intrinsic properties of the data in the sensed scene, it is inherently tied to the conditions under which the activity is sensed. To address this problem, we exploit the principle that, under the same sensing condition, data of the same activity class are more similar (in a certain latent space) than data of different classes, and that this property holds across different conditions. Our main observation is that meta-learning can also recast WHAR as a learning problem that is always posed under similar conditions, thereby removing the dependence on sensing conditions. With this capability, general and accurate WHAR can be achieved without model fine-tuning. In this paper, we implement this idea through two innovative designs in a system called RoMF. Extensive experiments with three types of sensing signals (FMCW, Wi-Fi, and acoustic) show that RoMF achieves up to 95.3% accuracy in unseen conditions, including new environments, users, and activity classes.
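The similarity principle stated in the abstract can be illustrated with a minimal sketch. This is not RoMF's design, which the abstract does not detail; it is a generic nearest-prototype classifier, shown only to make concrete how a few labeled samples from a new condition can be used for classification by comparison in a latent space, with no gradient updates. The function name `classify_by_prototype`, the two-dimensional embeddings, and the activity names are hypothetical, and the embeddings are assumed to come from some frozen, already-trained encoder.

```python
import numpy as np

def classify_by_prototype(support_emb, support_labels, query_emb):
    """Label each query by its nearest class prototype (no fine-tuning).

    support_emb:    (n_support, d) embeddings of the few labeled samples
                    collected under the new sensing condition.
    support_labels: (n_support,) activity labels of those samples.
    query_emb:      (n_query, d) embeddings of unlabeled samples from the
                    same condition.
    """
    support_emb = np.asarray(support_emb, dtype=float)
    support_labels = np.asarray(support_labels)
    query_emb = np.asarray(query_emb, dtype=float)

    # One prototype per class: the mean embedding of its support samples.
    classes = np.unique(support_labels)
    prototypes = np.stack(
        [support_emb[support_labels == c].mean(axis=0) for c in classes]
    )

    # Euclidean distance from every query to every prototype: (n_query, n_classes).
    dists = np.linalg.norm(query_emb[:, None, :] - prototypes[None, :, :], axis=2)

    # The closest prototype decides the label.
    return classes[dists.argmin(axis=1)]


# Toy data (assumption): two well-separated activity clusters in a 2-D latent space.
rng = np.random.default_rng(0)
centers = np.array([[0.0, 0.0], [5.0, 5.0]])
support_emb = np.concatenate(
    [c + 0.1 * rng.standard_normal((3, 2)) for c in centers]
)
support_labels = np.array(["walk"] * 3 + ["sit"] * 3)
query_emb = np.array([[0.2, -0.1], [4.8, 5.1]])

predictions = classify_by_prototype(support_emb, support_labels, query_emb)
```

Because the decision depends only on relative distances among samples gathered under the same condition, the classifier itself carries no condition-specific parameters; all learning would reside in the encoder that produces the embeddings.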