DeepBreath: Breathing Exercise Assessment with a Depth Camera

Practicing breathing exercises is crucial for patients with chronic obstructive pulmonary disease (COPD) to enhance lung

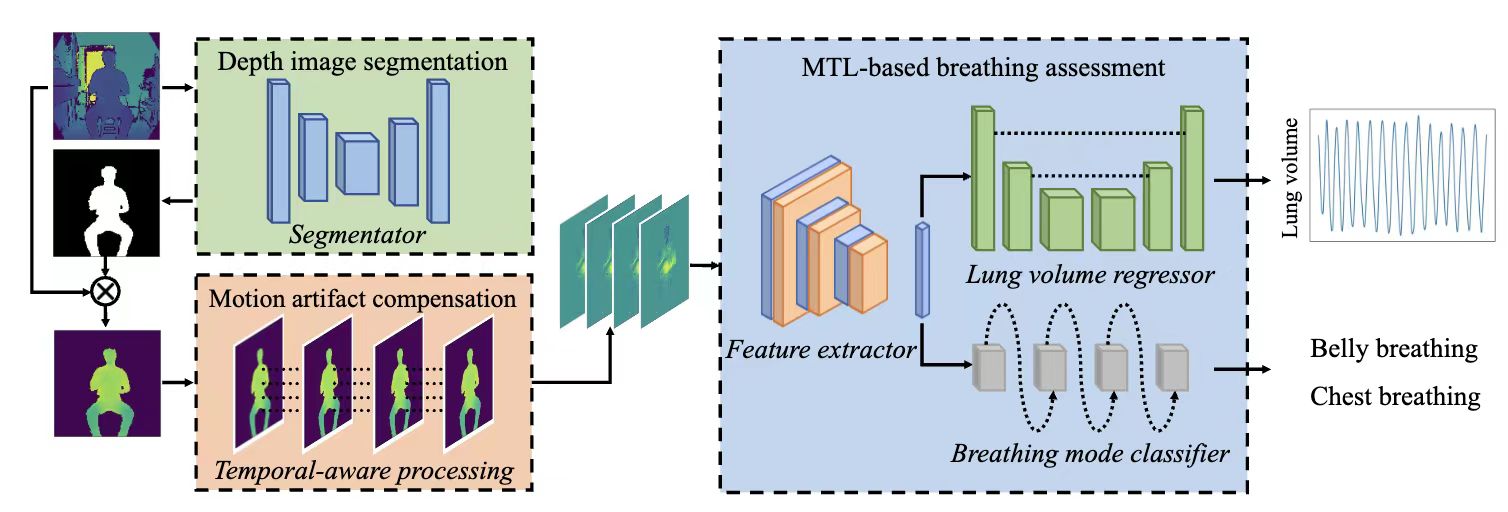

function. Breathing mode (chest or belly breathing) and lung volume are two important metrics for supervising breathing exercises. Previous works propose that these metrics can be sensed separately in a contactless way, but they are impractical with unrealistic assumptions such as distinguishable chest and belly breathing patterns, the requirement of calibration, and the absence of body motions. In response, this research proposes DeepBreath, a novel depth camera-based breathing exercise assessment system, to overcome the limitations of the existing methods. DeepBreath, for the first time, considers breathing mode and lung volume as two correlated measurements and estimates them cooperatively with a multitask learning framework. This design boosts the performance of breathing mode classification. To achieve calibration-free lung volume measurement, DeepBreath uses a data-driven approach with a novel UNet-based deep-learning model to achieve one-model-fit-all lung volume estimation, and it is designed with a lightweight silhouette segmentation model with knowledge transferred from a state-of-the-art large segmentation model that enhances the estimation performance. In addition, DeepBreath is designed to be resilient to involuntary motion artifacts with a temporal-aware body motion compensation algorithm. We collaborate with a clinical center and conduct experiments with 22 healthy subjects and 14 COPD patients to evaluate DeepBreath. The

experimental result shows that DeepBreath can achieve high breathing metrics estimation accuracy but with a much more realistic setup compared with previous works.
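
The idea of estimating breathing mode and lung volume jointly can be illustrated with a combined training objective. The following is a minimal sketch under stated assumptions, not DeepBreath's actual loss: it assumes a two-class breathing mode head scored with cross-entropy, a lung volume head scored with mean squared error, and a hypothetical `volume_weight` that balances the two terms. All function and parameter names are illustrative.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of raw scores."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, label):
    """Classification loss for the breathing mode head (0 = chest, 1 = belly)."""
    return -math.log(softmax(logits)[label])


def mse(pred, target):
    """Regression loss for the lung volume head over a sequence of samples."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def multitask_loss(mode_logits, mode_label, volume_pred, volume_true,
                   volume_weight=1.0):
    """Weighted sum of both task losses (volume_weight is an assumed knob)."""
    return (cross_entropy(mode_logits, mode_label)
            + volume_weight * mse(volume_pred, volume_true))
```

Because both terms are minimized together, gradients from the volume task also shape any features shared with the mode classifier, which is the general mechanism by which multitask training can help one task through the other.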