EyeGesener: Eye Gesture Listener for Smart Glasses Interaction Using Acoustic Sensing

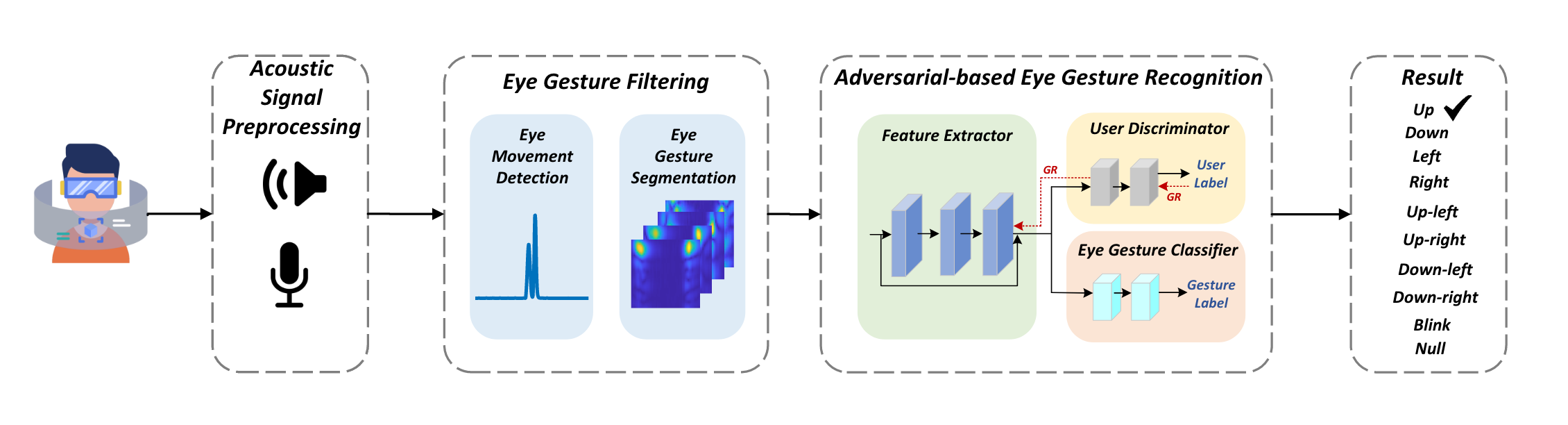

The smart glasses market has grown significantly in recent years. Commercial smart glasses rely mostly on hand-based interaction, which is unsuitable when both hands are occupied. In this paper, we propose EyeGesener, an eye gesture listener for smart glasses interaction using acoustic sensing. To mitigate the Midas touch problem, we define each interaction gesture as two intentional consecutive saccades in a specific direction, with no visual dwell. The proposed system is a glasses-mounted acoustic sensing system that uses two pairs of commercial speakers and microphones to sense eye gestures. To capture the subtle movements of the eyelid and surrounding skin induced by eye gestures, we design an Orthogonal Frequency Division Multiplexing (OFDM)-based channel impulse response (CIR) estimation scheme that allows the two speakers to transmit at the same time and in the same frequency band without collision. We implement eye gesture filtering and adversarial-based eye gesture recognition to identify interaction gestures while filtering out daily eye movements. To address differences in eye size and facial structure among users, we employ adversarial training to achieve user-independent eye gesture recognition. We evaluate the system on data collected from 16 subjects. The experimental results show that our system recognizes eight eye gestures with an average F1-score of 0.93 and a false alarm rate of 0.03. We also develop an interactive real-time audio-video player based on EyeGesener and conduct a user study, whose results demonstrate the high usability of the proposed system.
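To make the idea of collision-free simultaneous transmission concrete, the following is a minimal sketch of one common way to separate two transmitters inside a shared OFDM band: interleaved (comb) subcarrier allocation. This is an illustrative assumption, not necessarily the scheme used in EyeGesener. The frame length `N`, the pilot sequence, the toy channels `h_a` and `h_b`, and all function names are hypothetical, and the simulation uses complex baseband samples with an ideal cyclic prefix (modeled as circular convolution) and no noise.

```python
import cmath

def dft(x, inverse=False):
    """Naive O(N^2) DFT; adequate for a short illustrative frame."""
    n = len(x)
    sign = 1 if inverse else -1
    out = [sum(x[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n) for t in range(n))
           for k in range(n)]
    return [v / n for v in out] if inverse else out

def circular_convolve(x, h):
    """Channel effect on one OFDM symbol, assuming an ideal cyclic prefix."""
    n = len(x)
    return [sum(h[m] * x[(t - m) % n] for m in range(len(h))) for t in range(n)]

N = 16  # subcarriers per OFDM symbol (hypothetical value)
# Known unit-magnitude pilot on every subcarrier (hypothetical choice).
pilot = [cmath.exp(1j * cmath.pi * k * k / N) for k in range(N)]

def make_symbol(offset):
    """Time-domain symbol occupying subcarriers offset, offset + 2, ..."""
    freq = [pilot[k] if k % 2 == offset else 0 for k in range(N)]
    return dft(freq, inverse=True)

def estimate_cir(received, offset):
    """Recover an N/2-tap CIR from the subcarriers owned by one speaker."""
    spectrum = dft(received)
    # Least-squares channel estimate on this speaker's comb of subcarriers.
    response = [spectrum[k] / pilot[k] for k in range(offset, N, 2)]
    taps = dft(response, inverse=True)
    # A comb starting at `offset` sees h[n] * exp(-2j*pi*offset*n/N); undo it.
    return [taps[n] * cmath.exp(2j * cmath.pi * offset * n / N)
            for n in range(len(taps))]

# Toy channels, each no longer than N/2 taps so the comb does not alias.
h_a = [0, 0.9, 0, 0.3, 0, 0, 0, 0]
h_b = [0, 0, 0.7, 0, -0.2, 0, 0, 0]

# Both speakers transmit at once; the microphone hears the sum.
mic = [a + b for a, b in zip(circular_convolve(make_symbol(0), h_a),
                             circular_convolve(make_symbol(1), h_b))]

est_a = estimate_cir(mic, 0)  # speaker A owns the even subcarriers
est_b = estimate_cir(mic, 1)  # speaker B owns the odd subcarriers
```

Because each speaker's pilot is zero on the other speaker's subcarriers, the two transmissions overlap in time and span the same band, yet each CIR can be estimated independently from a single received frame. The cost in this sketch is that each estimate resolves only `N/2` taps.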