RimSense: Enabling Touch-based Interaction on Eyeglass Rim Using Piezoelectric Sensors

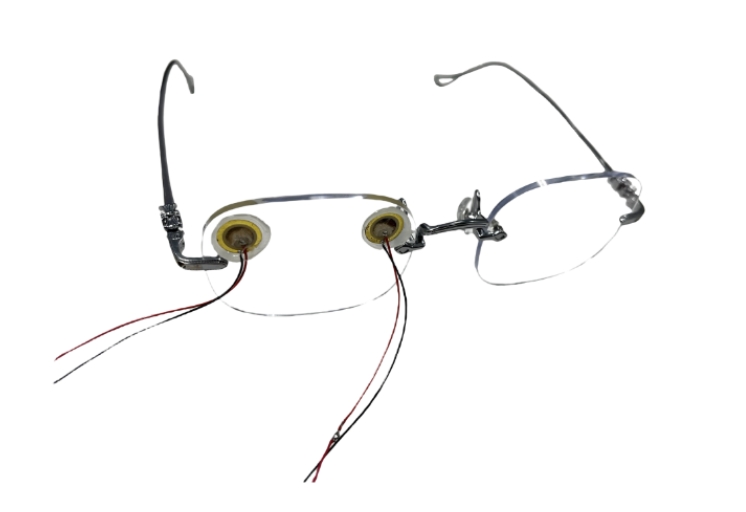

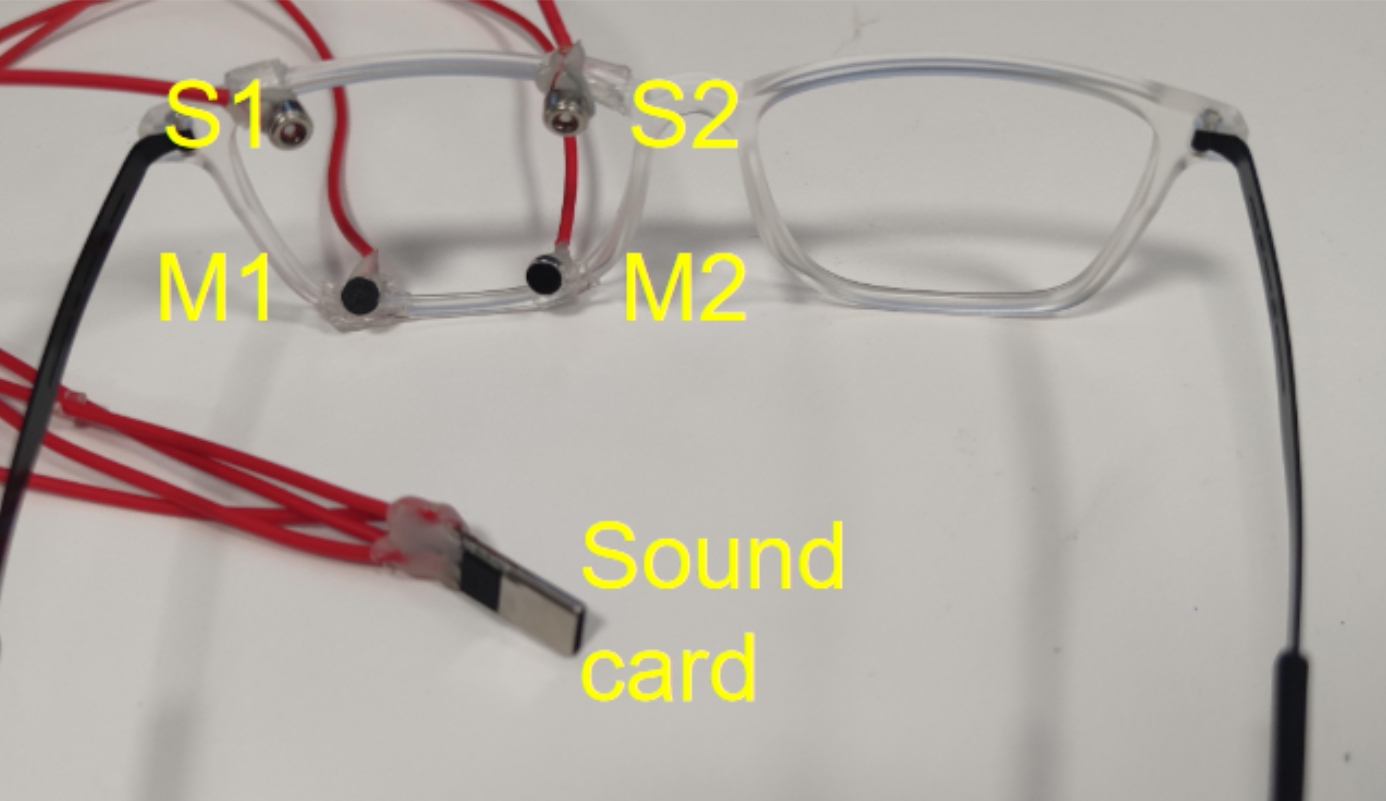

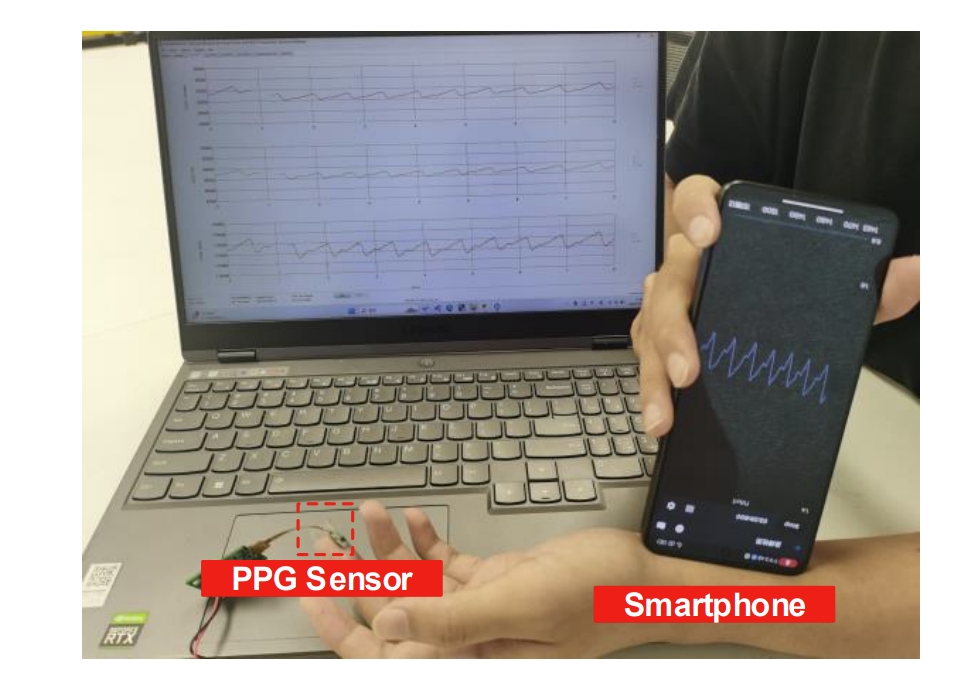

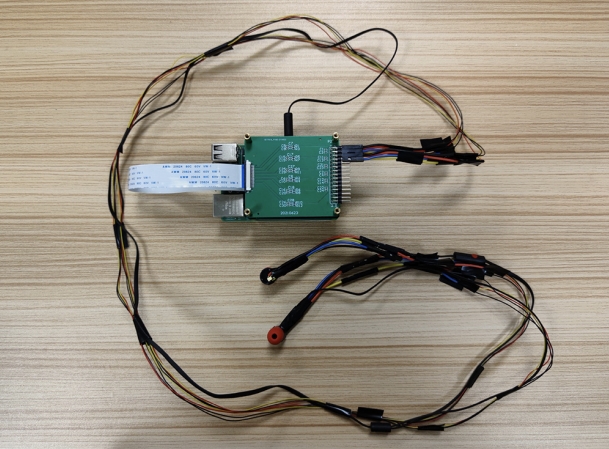

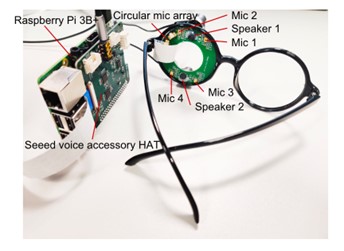

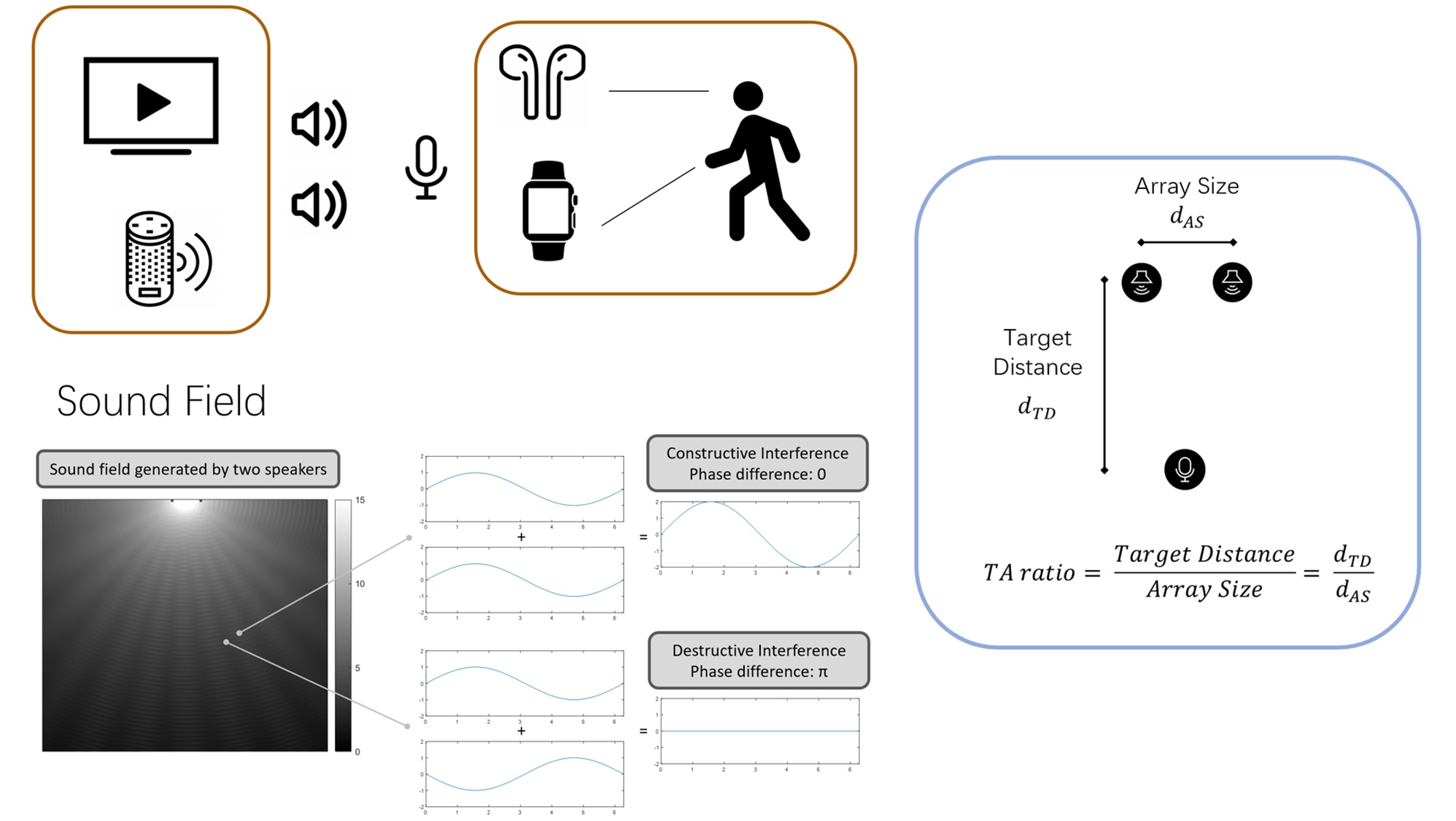

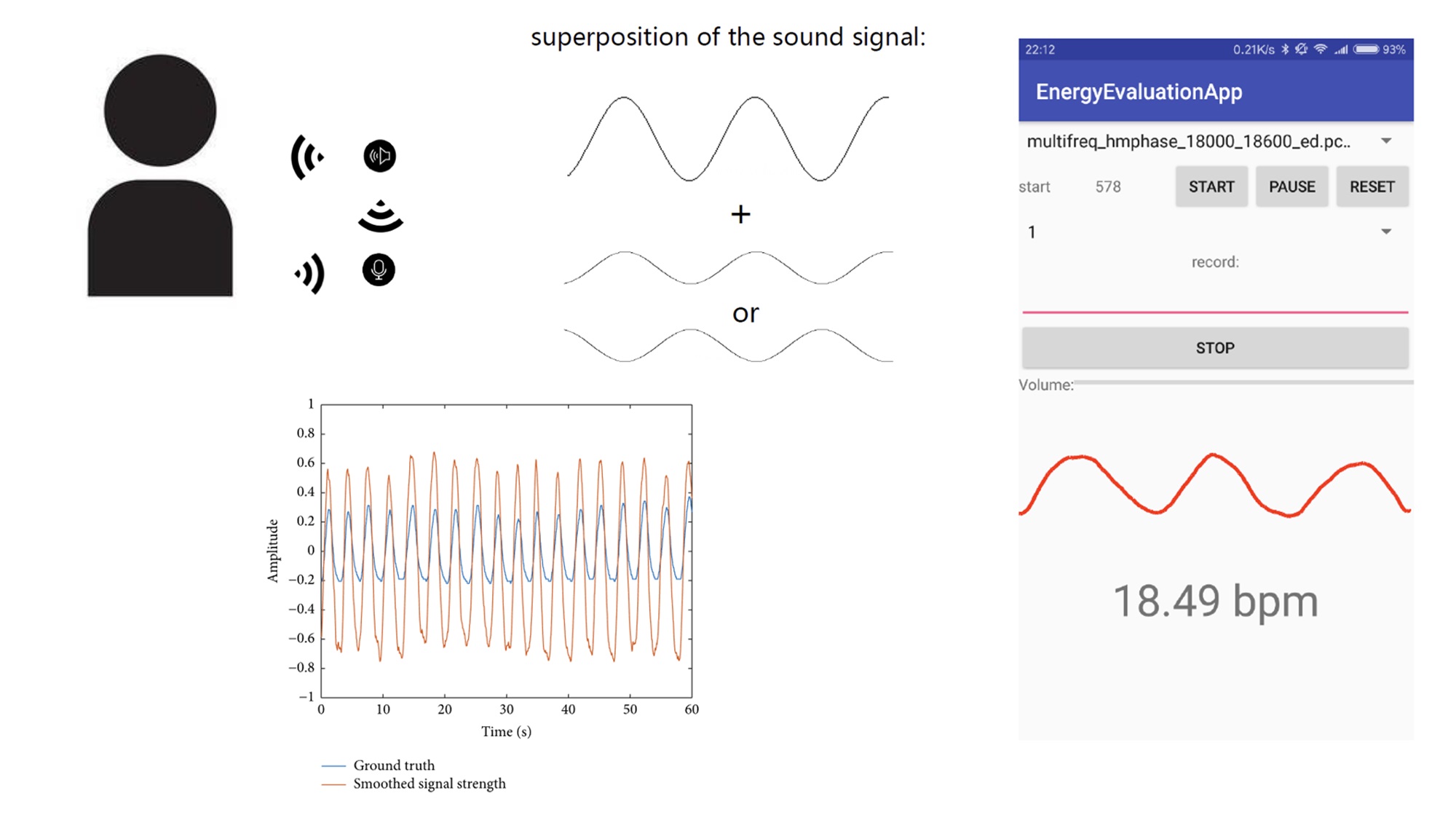

The interaction mode of smart eyewear has attracted significant research attention. While most commercial devices place a touch panel on the front of the eyeglass temple, this paper identifies a drawback: the touch panel and the display lie in non-parallel planes, which disrupts the direct mapping between gestures and the objects they manipulate on the display. We therefore propose RimSense, a proof-of-concept design for smart eyewear that introduces an alternative interaction surface: touch gestures on the eyewear rim. RimSense uses piezoelectric (PZT) transducers to turn the eyeglass rim into a touch-sensitive surface. When a user touches the rim, the resulting change in the eyeglasses' structural signal appears in the channel frequency response (CFR), which allows RimSense to recognize the performed touch gesture from the collected CFR patterns. Technically, we employ a buffered chirp as the probe signal to meet the requirements on sensing granularity and noise resistance. Additionally, we present a deep learning-based gesture recognition framework tailored for fine-grained time-sequence prediction, integrated with a Finite-State Machine (FSM) algorithm for event-level prediction so that the interaction experience suits gestures of varying durations. We implement a functional eyewear prototype with two commercial PZT transducers. RimSense can recognize eight touch gestures on the eyeglass rim and estimate gesture durations simultaneously, allowing gestures of varying lengths to serve as distinct inputs. We evaluate RimSense on 30 subjects and show that it can sense eight gestures and an additional negative class with an F1-score of 0.95 and a relative duration estimation error of 11%. We further make the system run in real time and conduct a user study with 14 subjects to assess the practicability of RimSense through interactions with two demo applications.
The user study demonstrates RimSense’s good performance, high usability, learnability and enjoyability. Additionally, we conduct interviews with the subjects, and their comments provide valuable insight for future eyewear design.
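
To make the step from frame-level to event-level prediction concrete, the following is a minimal sketch of how a small finite-state machine could collapse per-frame gesture labels into gesture events with durations. It is not the FSM used in RimSense, whose details are not given in this abstract. The names `frames_to_events` and `GestureEvent`, the `"none"` label for the negative class, the 50 ms frame period, and the `min_frames` and `max_gap` thresholds are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence

NEGATIVE = "none"  # assumed name for the negative (no-gesture) class


@dataclass
class GestureEvent:
    label: str
    start_s: float     # time of the first frame of the gesture
    duration_s: float  # estimated gesture duration


def frames_to_events(
    frame_labels: Sequence[str],
    frame_period_s: float = 0.05,  # assumed time between frame-level predictions
    min_frames: int = 3,           # identical frames needed to enter ACTIVE
    max_gap: int = 1,              # mismatching frames tolerated while ACTIVE
) -> List[GestureEvent]:
    """Two-state FSM (IDLE/ACTIVE) over per-frame labels."""
    events: List[GestureEvent] = []
    active: Optional[str] = None   # None means IDLE, otherwise the active label
    start = last = 0               # first and latest frame index of the active gesture
    run_label: Optional[str] = None
    run_start = 0                  # start index of the current run of equal labels

    def make_event(label: str, first: int, final: int) -> GestureEvent:
        return GestureEvent(label, first * frame_period_s,
                            (final - first + 1) * frame_period_s)

    for i, label in enumerate(frame_labels):
        if label != run_label:
            run_label, run_start = label, i
        run_len = i - run_start + 1

        if active is not None:
            if label == active:
                last = i                       # gesture continues
            elif i - last > max_gap:
                events.append(make_event(active, start, last))
                active = None                  # ACTIVE -> IDLE

        if active is None and label != NEGATIVE and run_len >= min_frames:
            active, start, last = label, run_start, i  # IDLE -> ACTIVE

    if active is not None:                     # flush a gesture still in progress
        events.append(make_event(active, start, last))
    return events
```

Under these assumptions, a one-frame dropout inside a gesture is bridged rather than splitting it into two events, and an isolated single-frame prediction never produces an event. Because each event carries a duration, a short and a long instance of the same gesture can be mapped to different inputs downstream.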